To support ethical use of AI-generated synthetic voices, prioritize strict consent protocols: obtain explicit permission before creating or sharing a cloned voice. Be transparent about how voice data is stored and used, and implement safeguards such as watermarking or digital signatures. Legal frameworks and ongoing oversight can help prevent misuse such as identity theft or deepfakes. The sections below cover practical strategies for managing these emerging challenges.

Key Takeaways

- Implement explicit consent protocols before creating or sharing synthetic voices to protect individual rights.

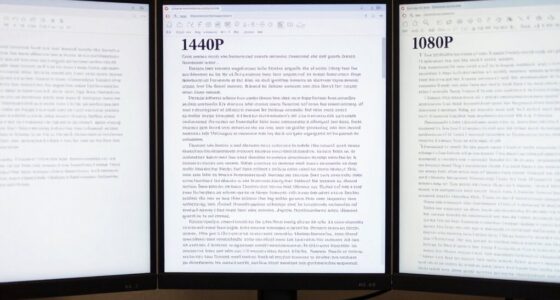

- Use technological safeguards like watermarking and digital signatures to prevent misuse and verify authenticity.

- Promote transparency regarding voice data usage, storage, and potential risks to build user trust.

- Establish legal frameworks that define acceptable applications and penalize malicious activities.

- Ensure ongoing oversight and regulation to uphold ethical standards and prevent harm from voice cloning technologies.

AI-generated synthetic voices are transforming how we communicate, offering realistic and customizable speech for a range of applications. This technology, driven by advances in voice cloning, can create voices that closely mimic real human speech. That opens up exciting possibilities, such as personalized assistants, voiceovers, and accessibility tools, but it also raises significant ethical concerns. One of the most critical is ensuring that voice cloning is used responsibly and respects individuals’ rights and privacy. That’s where consent protocols become essential. These protocols act as safeguards, requiring explicit permission before a synthetic voice is created or used. Without clear consent, the technology can be exploited for identity theft, fraud, or deepfakes.

Implementing strict consent protocols means verifying that the person whose voice is being cloned agrees to its use. This process involves clear, transparent communication about how the voice will be used, stored, and shared. The goal is to empower individuals to make informed decisions and retain control over their voice data. When you follow these protocols, you help prevent unauthorized voice cloning and reduce the risk of harm and mistrust. The challenge lies in developing standardized, enforceable consent processes that are easy to understand and accessible to everyone. As a user or developer, prioritize transparency: tell voice owners about potential risks, how their voice will be protected, and what measures are in place to prevent misuse.
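As a rough illustration of what such a consent check could look like in software, here is a minimal sketch. Everything in it is an assumption made for the example: the `ConsentRecord` class, its field names, and the `authorize_cloning` helper are hypothetical and do not come from any standard or library. The idea it shows is deny-by-default: a voice may be cloned only when a record exists, has not been revoked or expired, and covers the specific use requested.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of what a voice owner has agreed to."""
    speaker_id: str
    allowed_uses: frozenset            # e.g. frozenset({"accessibility"})
    granted_at: datetime
    expires_at: Optional[datetime] = None
    revoked: bool = False              # the owner can withdraw consent

    def permits(self, use: str, when: Optional[datetime] = None) -> bool:
        """Return True only if consent is active and covers this use."""
        when = when or datetime.now(timezone.utc)
        if self.revoked or when < self.granted_at:
            return False
        if self.expires_at is not None and when >= self.expires_at:
            return False
        return use in self.allowed_uses


def authorize_cloning(record: Optional[ConsentRecord], use: str,
                      when: Optional[datetime] = None) -> bool:
    """Deny by default: no record means no consent."""
    return record is not None and record.permits(use, when)
```

Listing permitted uses explicitly, rather than storing a single yes/no flag, keeps consent tied to a specific purpose, so permission given for an accessibility tool does not silently extend to advertising.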

Furthermore, ongoing oversight and regulation are necessary to maintain ethical standards. This includes establishing legal frameworks that clearly define acceptable uses of voice cloning technology and penalties for violations. As someone involved in this field, you should advocate for policies that mandate consent protocols and restrict malicious applications. It’s also vital to incorporate technological safeguards, like watermarking or digital signatures, to identify AI-generated voices and distinguish them from genuine recordings. This way, you help foster trust among users and prevent malicious actors from exploiting synthetic voices.
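To make the idea of tagging generated audio concrete, the sketch below attaches a keyed integrity tag to an audio file and checks it later. It is a simplified stand-in, not a real digital signature or watermark: it uses HMAC-SHA256 from the Python standard library, which relies on a shared secret key, whereas a production system would more likely use asymmetric signatures so that anyone can verify without being able to forge. The function names `tag_audio` and `verify_tag` are hypothetical.

```python
import hashlib
import hmac


def tag_audio(audio_bytes: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the audio to the key holder."""
    return hmac.new(key, audio_bytes, hashlib.sha256).hexdigest()


def verify_tag(audio_bytes: bytes, key: bytes, tag: str) -> bool:
    """Check that the audio is unmodified and was tagged with this key."""
    expected = tag_audio(audio_bytes, key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected.encode(), tag.encode())
```

A tag like this travels alongside the file and fails verification if the audio is edited or re-encoded. A watermark, by contrast, is embedded in the signal itself and is typically designed to survive such changes, which is why the two safeguards complement each other.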

Additionally, understanding the ethical implications of voice cloning, including its effect on trust between people, can help guide responsible usage. In essence, ethical safeguards around voice cloning are not just about technical solutions but also about respecting human dignity and rights. By adhering to strict consent protocols and supporting transparent practices, you contribute to the responsible use of AI-generated synthetic voices. This approach helps the technology benefit society without infringing on individual privacy or enabling deception. As this field evolves, staying committed to ethical standards will be key to harnessing the full potential of synthetic voices while protecting everyone’s interests.

Frequently Asked Questions

How Can I Verify the Authenticity of a Synthetic Voice?

To check whether a voice is synthetic, start by looking for a voice watermark, which embeds a unique identifier in the audio. Use specialized tools or services that detect these watermarks. Keep in mind that a missing watermark does not prove a recording is genuine, since not every generator adds one. Also listen for inconsistencies or irregularities, such as unnatural pauses or pitch shifts. Combining these methods improves your chances of correctly identifying synthetic voices and avoiding deception or misuse.

What Legal Rights Do Voice Owners Have Over AI-Generated Copies?

Your rights over AI-generated copies of your voice depend heavily on where you live. In many places a voice itself is not protected by copyright, and control over it comes instead from right-of-publicity, personality, or privacy laws, which tend to apply when your voice is recognizable and distinctive. Where such protections exist, they can let you limit how your voice is used, reproduced, or distributed by others. Always check local regulations and consider licensing agreements that spell out permitted uses, so your rights are respected in digital contexts.

Are There International Standards for Synthetic Voice Ethical Use?

Think of the world as a vast mosaic, with each piece representing different standards. Currently, there are no unified international standards for the ethical use of synthetic voices, which makes cross-border regulations and cultural considerations especially important. You must navigate this patchwork carefully, respecting diverse norms and practices. While some efforts aim to harmonize rules, you should stay informed, adapt responsibly, and ensure your use of synthetic voices honors both legal boundaries and cultural sensitivities worldwide.

How Do Synthetic Voices Impact Privacy and Consent Issues?

You should consider how synthetic voices impact privacy and consent, especially with voice impersonation becoming easier. These technologies can be used without your approval, risking identity theft or misinformation. Implementing strict consent protocols helps protect your rights, ensuring you’re aware of when your voice is used. Staying informed and demanding transparency from creators and users of synthetic voices helps safeguard your privacy and prevents misuse.

What Are the Potential Misuse Scenarios for AI-Generated Voices?

You should be aware that AI-generated voices can be misused in deepfake scams and impersonation frauds. Criminals might mimic someone’s voice to deceive others, access sensitive information, or commit financial crimes. These scenarios can cause serious harm, so it’s essential to stay cautious. Recognizing these risks helps you understand the importance of implementing safeguards and verifying identities to prevent falling victim to such malicious activities.

Conclusion

As you navigate the world of AI-generated synthetic voices, remember that with great power comes great responsibility. These voices are a double-edged sword, offering incredible possibilities but also posing ethical dilemmas. It’s up to you to advocate for safeguards that protect authenticity and trust. Don’t let the allure of the technology blind you; instead, be the guardian who ensures these voices serve humanity’s best interests, turning innovation into integrity instead of illusion.