Explainable AI helps you understand how complex models make decisions by revealing which factors influence their outputs. That transparency builds trust. Techniques like SHAP and LIME, along with visual tools, explain intricate models without requiring you to inspect every parameter, making them more accessible. As AI advances, explaining these systems becomes essential for accountability, fairness, and safety. The sections below look at how these methods improve trust and transparency across different industries.

Key Takeaways

- Explainable AI employs techniques like SHAP and LIME to clarify how models make decisions, revealing feature influences.

- Visual tools such as heat maps and feature importance plots help demystify complex models’ inner workings.

- Post-hoc explanation methods provide local insights, making individual predictions more transparent.

- Addressing the black box challenge involves balancing model complexity with interpretability through specialized tools.

- Enhancing transparency and trust supports ethical, legal, and practical deployment across sensitive industries.

Understanding the Need for Explainability in AI

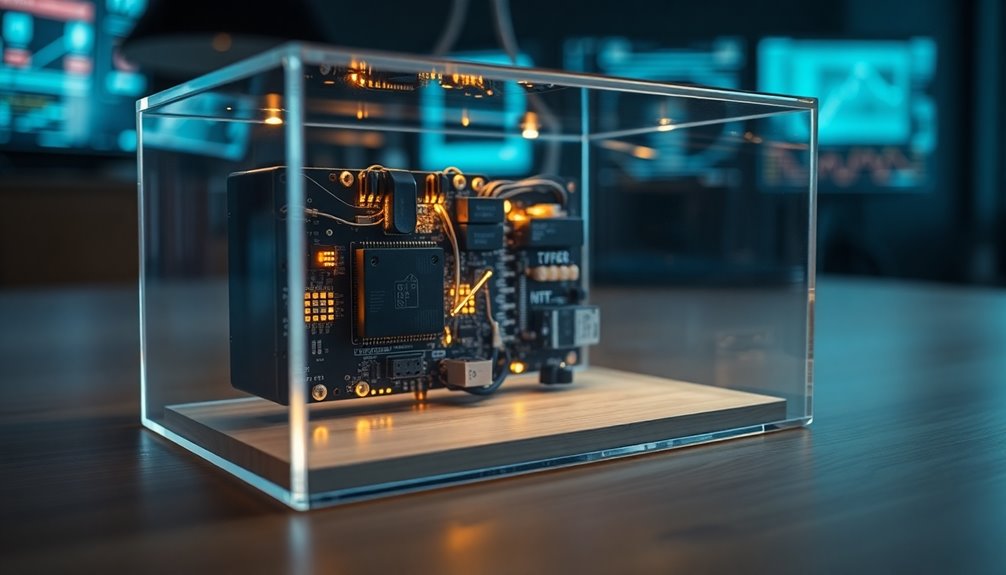

Why is explainability essential in AI? It builds trust by helping you understand how decisions are made, making AI less of a “black box.” When models are transparent, you can see the reasoning behind outcomes, which boosts confidence and encourages adoption across industries. Explainability also supports accountability: if something goes wrong, you can trace the error or bias back to its source. This transparency is crucial for regulatory compliance, as laws increasingly demand clear justifications for automated decisions affecting people. Understanding a model’s inner workings also helps developers improve it, fix weaknesses, and prevent unintended consequences. Interpretability improves collaboration between technical teams and stakeholders, leading to better-designed solutions. For users and stakeholders, explainability offers clarity, enabling them to interpret and challenge outcomes, which fosters acceptance and responsible use of AI technology.

Core Principles Guiding Transparent AI Systems

A small set of core principles underpins trustworthy and responsible AI. Accountability means establishing clear roles for developers and operators, making them answerable for AI decisions and impacts. Responsibility involves implementing oversight, auditing, and compliance measures to detect errors or biases and correct them promptly. Fairness requires identifying and mitigating biases in data and algorithms so that performance is consistent across different groups. Transparency about data sources, preprocessing, and decision pathways enables better trust and evaluation, and clear documentation of data provenance supports both interpretability and ongoing refinement. Communicating AI decisions in human-understandable language and adhering to ethical and legal standards supports responsible use. Privacy safeguards are also crucial to protect user data and maintain compliance with privacy regulations. Regular updates informed by user feedback can improve fairness and responsiveness over time.

Techniques for Demystifying Machine Learning Models

Demystifying machine learning models is essential for building trust and ensuring proper use of AI systems. SHAP attributes a prediction to its input features, showing how much each feature pushed the output up or down. LIME creates local explanations by approximating a complex model around a single prediction with a simple, interpretable one. Neuron-level tools such as MILAN, which generates natural-language descriptions of individual neurons, can help audit models for unexpected behaviors and troubleshoot issues like over-reliance on specific features. More broadly, analyzing neural networks can involve examining individual neurons to see how they contribute to decisions, and feature attribution methods highlight the most influential variables. As models grow more complex, these techniques become crucial for explaining their behavior, supporting compliance, and improving decision-making in sectors like healthcare and finance.
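To make the idea behind SHAP concrete, here is a minimal sketch that computes exact Shapley values by brute force for a tiny model. It is not the SHAP library’s API; the function `shapley_values`, the toy model, and the all-zero baseline are assumptions made for illustration. Real tools approximate this calculation because enumerating every feature coalition is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction by enumerating all coalitions.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(instance)

    def value(coalition):
        # Model output when only the features in `coalition` keep their real values.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical toy model with an interaction between income and years.
def toy_model(x):
    income, debt, years = x
    return 2.0 * income - 1.5 * debt + 0.5 * income * years


instance = [4.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference point
phi = shapley_values(toy_model, instance, baseline)

for name, contribution in zip(["income", "debt", "years"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split evenly between the two features involved. The choice of baseline matters: a different reference point yields different attributions.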

Visual Tools That Make AI Decisions Clearer

Visual tools make AI decisions more understandable by turning complex model behavior into clear, accessible images. Graphs, charts, and diagrams reveal patterns and trends within a model, making it easier to interpret how decisions are made. Interactive dashboards let you explore model behavior in depth and customize the view. SHAP and LIME outputs are commonly plotted as feature importance charts for specific cases, while saliency maps highlight the regions of an image that most influenced a prediction. Overall, visual tools help you understand, evaluate, and refine AI models more effectively, fostering greater confidence and accountability in AI systems.
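One simple way to produce a saliency map is occlusion: blank out each pixel in turn and record how much the model’s score drops. The sketch below does this for a tiny grid and renders the result as a text heat map. The scoring function, the 4x4 “image”, and the helper names are hypothetical; real tools operate on actual model outputs and usually occlude patches rather than single pixels.

```python
def occlusion_saliency(score, image, fill=0.0):
    """Saliency of each pixel = drop in score when that pixel is set to `fill`."""
    base = score(image)
    saliency = []
    for r, row in enumerate(image):
        sal_row = []
        for c in range(len(row)):
            occluded = [list(rw) for rw in image]  # copy so the input is untouched
            occluded[r][c] = fill
            sal_row.append(base - score(occluded))
        saliency.append(sal_row)
    return saliency


def render_heatmap(saliency, shades=" .:*#"):
    """Map absolute saliency to characters, darkest character = most influential."""
    peak = max(max(abs(v) for v in row) for row in saliency) or 1.0
    top = len(shades) - 1
    return "\n".join(
        "".join(shades[int(abs(v) / peak * top)] for v in row) for row in saliency
    )


# Hypothetical scorer that only responds to the centre 2x2 patch.
def toy_score(img):
    return img[1][1] + img[1][2] + img[2][1] + img[2][2]


image = [[1.0] * 4 for _ in range(4)]
sal = occlusion_saliency(toy_score, image)
print(render_heatmap(sal))
```

Only the four centre pixels are marked, because they are the only ones the toy scorer reads. With a real model the map is rarely this clean, which is part of why it is useful as a debugging aid.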

Real-World Benefits of Interpretable AI Solutions

Interpretable AI solutions deliver tangible benefits across industries by making complex decision processes transparent and understandable. In healthcare, doctors can see how a diagnosis was reached, which supports trust, personalized treatment, and safer outcomes, and patients stay informed about AI-influenced decisions. In finance, clear explanations support regulatory compliance, help reduce bias, and build stakeholder trust in lending and investment decisions. In criminal justice, transparency promotes fairness and accountability in sentencing and evidence analysis. In human resources, it helps detect and prevent bias during hiring. In insurance, interpretability clarifies risk assessments and claim decisions, reducing disputes and fostering customer confidence. Earning user trust is essential for adopting AI in sensitive sectors, and strong security measures protect sensitive data and help maintain that confidence. Public awareness of how interpretable systems work can also foster broader acceptance of AI. Overall, these benefits improve decision quality, compliance, fairness, and trust.

Challenges in Creating Truly Explainable Systems

Creating truly explainable AI systems is challenging because of the trade-off between model complexity and transparency. Complex models like deep neural networks and ensemble methods act as “black boxes,” making their internal processes hard to interpret. Simplifying these models to improve explainability often reduces accuracy, creating a fundamental compromise. Post-hoc explanation techniques provide approximations that may not fully reflect the reasoning behind a decision and can be unreliable. Very large models, such as GPT-4 (whose exact parameter count has not been published), show how the difficulty of understanding outputs grows with scale. Domain-specific data and decision processes add further layers of complexity, especially in fields like healthcare or climate science. Balancing the need for detailed explanations with model effectiveness remains one of the biggest hurdles in developing transparent AI systems. Security concerns add to the urgency, since understanding model behavior helps prevent vulnerabilities.
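The caveat about post-hoc approximations can be checked directly. The sketch below fits a LIME-style local surrogate (a proximity-weighted linear model around one input) and reports how well the surrogate matches the black box near that point. It is not the `lime` package; `local_surrogate`, the Gaussian sampling, the kernel width, and both test functions are assumptions chosen for illustration.

```python
import math
import random


def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(predict, instance, n_samples=200, scale=0.5, width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (intercept, coefficients, weighted_rmse). The RMSE is a rough
    fidelity measure: how far the surrogate is from the black box locally.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        x = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((a - b) ** 2 for a, b in zip(x, instance))
        rows.append([1.0] + x)  # leading 1.0 is the intercept column
        targets.append(predict(x))
        weights.append(math.exp(-dist2 / width ** 2))  # closer samples count more
    k = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights))
            for i in range(k)]
    beta = solve_linear(xtwx, xtwy)
    sq_err = sum(w * (sum(b * v for b, v in zip(beta, r)) - y) ** 2
                 for r, y, w in zip(rows, targets, weights))
    return beta[0], beta[1:], math.sqrt(sq_err / sum(weights))


def linear_box(x):      # hypothetical black box that really is linear
    return 3.0 * x[0] - 2.0 * x[1] + 1.0


def curved_box(x):      # hypothetical black box with curvature
    return x[0] ** 2 + math.sin(3.0 * x[1])


for name, box in [("linear", linear_box), ("curved", curved_box)]:
    intercept, coefs, rmse = local_surrogate(box, [1.0, 2.0])
    print(name, [round(c, 3) for c in coefs], "fidelity error:", round(rmse, 4))
```

For the linear black box the surrogate recovers the true coefficients and the fidelity error is essentially zero. For the curved one the error is clearly nonzero, which is a reminder to report fidelity alongside any local explanation rather than treating the coefficients as ground truth.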

Balancing Detail and Simplicity in Explanations

Striking the right balance between detail and simplicity is key to making AI decisions understandable without overwhelming you. Techniques like SHAP and LIME highlight the features that most influence a decision. Local explanations focus on individual predictions, helping you grasp the specific reasons behind an outcome. Counterfactual analysis shows how changing inputs could alter results, deepening understanding. For complex models like deep neural networks, post-hoc tools like Grad-CAM highlight the input regions that drive a prediction without modifying the model, so accuracy is unaffected. Visual aids such as heat maps and feature importance plots make explanations more accessible, and clear contextual information alongside technical details helps bridge the gap between model behavior and user understanding. Explanations should also be tailored to the needs of different users. The goal is to provide enough detail to be meaningful while keeping explanations straightforward enough to comprehend easily, enabling trust and informed decision-making without unnecessary complexity.
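Counterfactual analysis is easy to illustrate on a small scale. The sketch below searches for the smallest single-feature change that flips a yes/no decision. The loan rule, the feature names, and the step sizes are all hypothetical, and “smallest” here simply means the fewest steps of the chosen size; practical counterfactual tools use richer distance measures and feasibility constraints.

```python
def find_counterfactual(predict, instance, steps, max_steps=100):
    """Find the single-feature change with the fewest steps that flips the decision.

    `steps` maps feature name -> signed step size to try (user-chosen).
    Returns (feature, new_value, n_steps), or None if nothing flips.
    """
    original = predict(instance)
    best = None
    for name, step in steps.items():
        candidate = dict(instance)
        for n in range(1, max_steps + 1):
            candidate[name] = instance[name] + n * step
            if predict(candidate) != original:
                if best is None or n < best[2]:
                    best = (name, candidate[name], n)
                break  # first flip for this feature is its smallest change
    return best


# Hypothetical approval rule standing in for a trained model.
def approve(applicant):
    return applicant["income"] - 2.0 * applicant["debt"] >= 15.0


applicant = {"income": 30.0, "debt": 10.0}
steps = {"income": 1.0, "debt": -1.0}  # raise income or lower debt, one unit at a time

result = find_counterfactual(approve, applicant, steps)
if result is not None:
    feature, new_value, n = result
    print(f"Decision flips if {feature} changes to {new_value} ({n} steps)")
```

An explanation of this form (“the decision would change if debt were 7 instead of 10”) is often easier for a non-technical reader to act on than a list of feature weights.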

Building Trust Through Transparency and Accountability

Building trust in AI systems hinges on transparency and accountability, which help users and stakeholders understand how decisions are made. Explainability clarifies individual AI outcomes. Transparency reveals how models operate, including the datasets and algorithms behind them. Interpretability helps users grasp internal processes, such as input-output relationships. Algorithmic audits and explainable AI tools support ongoing monitoring and evaluation, and regular bias audits by independent third parties are essential for identifying and reducing discriminatory effects. Legal measures, such as anti-discrimination laws, data protection rules, and transparency reports, create safeguards. Clear redress mechanisms hold organizations accountable for biased decisions. Ethical principles and stakeholder collaboration further reinforce responsible AI deployment.

The Future of Explainability in AI Development

The future of explainability in AI development is marked by a shift toward integrating interpretability directly into system architectures, rather than adding explanations after models are built. You’ll see AI systems where transparency is built-in from the start, making decisions inherently understandable. This approach applies especially in high-stakes areas like healthcare and finance, where trust matters most. Expect to encounter AI-human hybrid explanation models that combine automated insights with human expertise for richer clarity. Causal and counterfactual explanations will become more common, showing not just what decisions were made but why, based on underlying causal structures. Personalized explanations will adapt to your needs, preferences, and expertise, improving usability. New techniques and visualization tools will continue to evolve, making AI reasoning more accessible and trustworthy.

Advancing Standards and Best Practices in XAI

Establishing robust standards and organizational practices is essential for guaranteeing that explainable AI (XAI) is effective, trustworthy, and aligned with organizational goals. You should create cross-functional AI governance committees with diverse expertise in data science, ethics, and legal compliance to oversee XAI deployment. Clearly define roles and responsibilities related to explainability within project teams to ensure accountability and alignment. Foster collaboration between AI developers, business stakeholders, and end-users to customize explanations for different audiences. Incorporate XAI governance into existing risk management frameworks to ensure compliance and consistency. Adhere to core principles like providing meaningful, accurate, and context-aware explanations. Employ best practices such as transparent data use, interpretable models, and continuous validation to build reliable, user-friendly explanations that meet regulatory and operational needs.

Frequently Asked Questions

How Do Different User Groups Influence XAI Explanation Design?

Different user groups shape how explanations should be designed in XAI. Developers want detailed, technical insights, while end-users need simple, relatable explanations. Domain experts and decision-makers require tailored, context-specific information. By understanding each group’s needs, you can create explanations that are clear, relevant, and effective, which improves trust, engagement, and usability.

Can Explainability Compromise AI Model Performance or Security?

You might wonder if making AI models explainable affects their performance or security. It can, because adding transparency often requires simplifying models, which may reduce accuracy. Also, detailed explanations can expose vulnerabilities, leaking sensitive info or enabling attacks. While explainability boosts trust and debugging, it’s a delicate balance—you need enough transparency without compromising security or sacrificing too much performance.

What Are the Key Challenges in Standardizing XAI Evaluation Metrics?

You face multiple challenges when trying to standardize XAI evaluation metrics. Different stakeholders have diverse expectations, making consensus difficult. The complexity of AI models and the subjective nature of explainability complicate developing universal metrics. You also need to guarantee scalability, fairness, and adaptability across applications. Additionally, integrating insights from various disciplines and setting clear, accepted benchmarks remains tough, hindering consistent, reliable evaluation of explanations.

How Does XAI Address Biases in Machine Learning Models?

Biased AI models can lead to unfair decisions at scale. XAI helps uncover hidden biases by revealing how input features influence outcomes. It helps you identify discriminatory patterns, adjust models during training for fairness, and monitor decisions over time. Transparent explanations build trust, support accountability, and foster responsible AI deployment that respects ethical standards and promotes equitable treatment for all users.
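Monitoring decisions over time can start with something as simple as comparing outcome rates across groups. The sketch below computes per-group selection rates and their ratio. The records, field names, and helper functions are made up for illustration, and a low ratio is a signal to investigate further, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for rec in records:
        counts[rec[group_key]][0] += 1 if rec[outcome_key] else 0
        counts[rec[group_key]][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (assumes some positives)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical decision log: group A approved 4 of 5, group B approved 2 of 5.
decisions = (
    [{"group": "A", "approved": True}] * 4
    + [{"group": "A", "approved": False}] * 1
    + [{"group": "B", "approved": True}] * 2
    + [{"group": "B", "approved": False}] * 3
)

rates = selection_rates(decisions, "group", "approved")
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A commonly cited rule of thumb flags ratios below 0.8 for review. Once a gap like this shows up, attribution methods can help determine which input features are driving the difference between groups.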

Are There Industry-Specific Best Practices for Implementing Explainable AI?

Best practices vary by industry, but some apply broadly. Prioritize interpretable models like decision trees or rule-based systems when possible. Use post-hoc explanation tools for complex models, and support transparency through thorough documentation, monitoring, and stakeholder communication. Tailor explanations to your audience: technical for data scientists, simple for end-users. Regularly review and update explanation methods to stay aligned with evolving regulations and industry standards.

Conclusion

As you step into the world of explainable AI, imagine peeling back the layers of a complex machine to reveal its inner workings. By embracing transparency, you build trust and illuminate the path forward. With each clear explanation, you turn a mysterious black box into a guiding lantern, lighting the way for responsible innovation. The result is AI that is not only powerful but also understandable, and a future where technology serves everyone.