TinyML lets smart home devices run machine learning models locally on low-power microcontrollers, which enables faster and more private automation. Devices can process data such as voice commands or security footage without sending it to the cloud, which reduces latency, saves energy, and keeps more of your personal information at home. Innovations such as AI co-processors and federated learning are pushing TinyML toward smarter, more private homes. The sections below explain how these pieces work and where the field is heading.

Key Takeaways

- TinyML enables smart home devices to process sensor data locally, ensuring faster responses and reduced latency.

- It enhances privacy by keeping sensitive data on the device, minimizing cloud reliance and data exposure risks.

- TinyML improves energy efficiency, allowing devices like security cameras and voice assistants to operate longer on low power.

- Model and hardware optimization techniques let TinyML models run efficiently on the limited memory of microcontrollers inside smart home gadgets.

- Future trends include federated learning for smarter, privacy-preserving updates and increased device autonomy in smart homes.

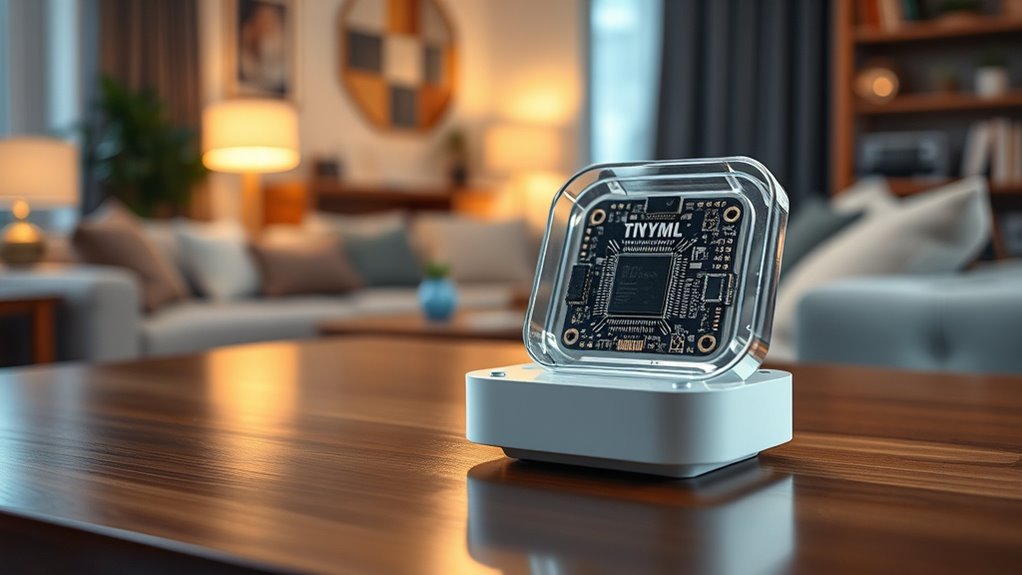

Understanding TinyML and Its Core Principles

TinyML brings machine learning directly to small, low-power devices such as microcontrollers and embedded systems. It analyzes sensor data on the device itself, typically at power levels in the milliwatt range or below, by deploying simple but effective models on resource-limited hardware. The field sits at the intersection of embedded systems and machine learning, combining hardware, algorithms, and software that are all optimized for minimal energy consumption. Its goal is to support always-on, real-time applications on battery-powered devices without depending on cloud services.

Because these microcontrollers draw so little power, they can run for long periods without frequent charging. Processing data locally also reduces the energy spent on network transmission, improves responsiveness, and keeps data private, which makes TinyML a good fit for environmental sensing and user activity monitoring. As model optimization techniques improve, TinyML supports increasingly sophisticated capabilities while keeping a low power profile and adapting to a wide range of hardware constraints and use cases.
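The sense, infer, act cycle at the heart of TinyML can be sketched in a few lines. The Python below is a minimal illustration, not a production implementation: the two features (mean and RMS), the logistic-regression "model", and the hand-picked `WEIGHTS` and `BIAS` values are all hypothetical stand-ins for a trained network, and on a real microcontroller this loop would usually be written in C or C++.

```python
import math

# Hypothetical weights for a tiny logistic-regression "model"; a real
# deployment would use trained values, not hand-picked ones.
WEIGHTS = (4.0, 6.0)  # weights for the (mean, rms) features
BIAS = -5.0
THRESHOLD = 0.5


def extract_features(window):
    """Summarize a window of raw sensor samples as (mean, RMS)."""
    n = len(window)
    mean = sum(window) / n
    rms = math.sqrt(sum(x * x for x in window) / n)
    return mean, rms


def predict(window):
    """Return the model's probability that the window contains an event."""
    mean, rms = extract_features(window)
    z = WEIGHTS[0] * mean + WEIGHTS[1] * rms + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def on_new_window(window):
    """Decide locally; only the label, not the raw samples, is returned."""
    return "event" if predict(window) >= THRESHOLD else "idle"


print(on_new_window([0.0, 0.1, -0.1, 0.05]))  # quiet signal → idle
print(on_new_window([0.9, 1.1, 0.8, 1.2]))    # strong signal → event
```

Only the returned label needs to leave the function, which is the property that keeps raw sensor data on the device.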

As more devices process sensitive information locally, strong on-device data security becomes essential for protecting user privacy in connected homes.

How TinyML Enables Real-Time Smart Home Automation

TinyML transforms smart home automation by letting devices process data locally, which shortens response times. Commands such as voice control or gesture recognition are handled faster because the device analyzes the input on-site instead of waiting for a round trip to a server. Less reliance on cloud connectivity also makes responses more dependable when the internet connection is slow or down. For voice assistants, on-device recognition gives immediate feedback and a more natural experience, and lower latency makes everyday interactions, such as adjusting the thermostat or turning on lights, feel instantaneous. TinyML-enabled sensors can also feed their local decisions into the wider IoT setup, so several devices can be coordinated from a single interface. Finally, keeping processing local improves the privacy and security of household information. Together, these features make your smart home more responsive, intuitive, and enjoyable.

Advantages of On-Device Data Processing for Privacy and Security

When data is processed directly on the device, it stays local, which reduces its exposure to external threats. Less data transfer means fewer opportunities for breaches and unauthorized access, and you keep greater control over your privacy. As on-device AI models become more capable, robust safety measures are still needed to prevent vulnerabilities and keep operation trustworthy. Tuning models for the specific device improves performance and reliability, and encryption adds another layer of protection against unauthorized access.

Data Remains Local

Processing data directly on the device keeps sensitive information local. When smart home devices handle data on-site, they depend less on remote servers, which reduces the risk of data breaches. Local storage creates fewer access points for unauthorized users and makes it easier to comply with privacy regulations, since sensitive data stays within the device. Device-level encryption further protects stored information from theft. Data that is never transmitted cannot be intercepted in transit, and it is not exposed to server breaches or insecure networks. Edge computing techniques and TinyML add efficiency on top of these benefits, bringing intelligent features directly to the device with low power consumption and low latency. Overall, keeping data on-device strengthens both your privacy and your smart home’s security, giving you peace of mind.

Reduced Data Exposure

Handling data on the device considerably reduces the amount of information sent over networks. Less data in transit means fewer opportunities for interception or unauthorized access, and sensitive information stays local, lowering the risk of leaks and breaches. This approach also helps with compliance with privacy regulations. Because personal data is not shared externally, exposure to network-based attacks such as man-in-the-middle interception drops markedly. When some data must still be transmitted, it can be kept minimal, such as a detected event rather than raw audio or video, and protected with encryption and secure communication protocols. Edge computing also reduces latency and dependence on cloud services, and advances in on-device machine learning continue to make this processing more seamless. Overall, on-device processing gives you greater control over your data and reduces the risks of interception and exposure.
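The difference in what crosses the network can be illustrated by comparing payload sizes. This sketch assumes a hypothetical audio sensor that either uploads one second of raw 16 kHz samples or uploads only its local detection result; the device name and the `glass_break` label are made up, and JSON stands in for whatever encoding a real device would use.

```python
import json


def raw_payload(samples):
    """A cloud-first design uploads every raw sample for remote analysis."""
    return json.dumps({"samples": samples}).encode("utf-8")


def event_payload(device_id, label, confidence):
    """An on-device design uploads only the inference result."""
    return json.dumps({"device": device_id, "event": label,
                       "conf": round(confidence, 2)}).encode("utf-8")


# Hypothetical: one second of 16 kHz audio versus a single detection result.
audio = [0.01] * 16000
raw = raw_payload(audio)
event = event_payload("kitchen-mic", "glass_break", 0.934)
print(len(raw), "bytes vs", len(event), "bytes")
```

The raw upload grows with every second of audio, while the event message stays a few dozen bytes and contains nothing that could reconstruct what was said in the room.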

Enhanced Device Security

Have you considered how on-device data processing enhances your device’s security and privacy? When smart home devices process data locally, sensitive information stays within the device, reducing the risk of breaches. Keeping data local can also simplify compliance with privacy laws such as the GDPR, since less personal data needs to be sent elsewhere. A self-contained system minimizes data transmission and limits the attack surface, which makes remote attacks more difficult. TinyML also enables real-time threat detection, so devices can quickly identify and respond to security issues, and transmitting less data makes them more energy-efficient and cost-effective. Following security best practices in device design and encrypting data during processing and storage further protects against vulnerabilities. With these capabilities, your smart home becomes more secure, private, and reliable, even without constant internet access.

Impact of TinyML on Energy Efficiency and Battery Life in Smart Devices

TinyML considerably improves energy efficiency and battery life by letting devices run useful inference with minimal power consumption. Because TinyML models run on ultra-low-power microcontrollers, they reduce energy use and extend battery lifespan, which makes long-term autonomous operation practical even in remote or off-grid locations. Low power requirements also make small solar cells a viable power source, further reducing reliance on batteries. Lower consumption means fewer battery replacements or recharges, cutting maintenance effort and cost, and it reduces environmental impact through less electronic waste and energy demand. Overall, TinyML lets smart home devices operate longer and more reliably while supporting environmentally friendly technology practices.
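The battery-life gains come from simple arithmetic: a device that wakes briefly to run inference and sleeps the rest of the time draws a time-weighted average current far below its active current. The figures below (1000 mAh battery, 10 mA active, 10 µA asleep, 5% duty cycle) are hypothetical, and the model ignores self-discharge and converter losses, so treat the result as an idealized upper bound rather than a prediction.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current when active for duty_cycle of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_days(capacity_mah, avg_current_ma):
    """Idealized runtime: ignores self-discharge, temperature, and regulator losses."""
    return capacity_mah / avg_current_ma / 24.0


# Hypothetical figures: 1000 mAh battery, 10 mA while inferring, 10 uA asleep.
always_on = battery_life_days(1000, 10.0)
duty_cycled = battery_life_days(1000, average_current_ma(10.0, 0.01, 0.05))
print(f"always on: {always_on:.1f} days, 5% duty cycle: {duty_cycled:.1f} days")
# → always on: 4.2 days, 5% duty cycle: 81.8 days
```

The sleep current dominates once the duty cycle is small, which is why ultra-low-power sleep modes matter as much as efficient inference.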

Overcoming Hardware and Model Optimization Challenges

Despite TinyML’s energy benefits, deploying effective models on resource-limited devices presents significant hurdles. You need to reduce model size without sacrificing accuracy, often using pruning, quantization, or knowledge distillation. But high compression can degrade performance, so tuning is essential. Compatibility with hardware features like specialized instruction sets is critical for real-time inference. Memory constraints, often under 1MB, force you to optimize memory management through layer fusion or weight sharing. Hardware heterogeneity adds complexity, requiring tailored models for different devices. Here’s a quick overview:

| Challenge | Solution |
|---|---|
| Model size and accuracy loss | Pruning, quantization, distillation |
| Memory and storage limitations | Layer fusion, static memory layouts |
| Hardware diversity | Hardware-specific optimizations |
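The memory row of the table can be checked before deployment with a rough budget. This sketch assumes a common microcontroller layout in which model weights are stored in flash alongside the firmware, while intermediate activations live in a RAM working buffer (the "arena"); the board sizes, firmware footprint, arena size, and parameter count are all hypothetical.

```python
KIB = 1024


def model_fits(num_params, bytes_per_param, arena_bytes,
               flash_bytes, ram_bytes, firmware_bytes=0):
    """Assumed layout: weights in flash next to firmware, activations in a RAM arena."""
    weight_bytes = num_params * bytes_per_param
    fits_flash = weight_bytes + firmware_bytes <= flash_bytes
    fits_ram = arena_bytes <= ram_bytes
    return fits_flash and fits_ram


# Hypothetical board: 1 MiB flash, 256 KiB RAM, 200 KiB already used by firmware.
board = dict(flash_bytes=1024 * KIB, ram_bytes=256 * KIB, firmware_bytes=200 * KIB)
params = 250_000  # hypothetical model size
print("float32 fits:", model_fits(params, 4, 64 * KIB, **board))  # → False
print("int8 fits:", model_fits(params, 1, 64 * KIB, **board))     # → True
```

In this example, quantizing from 4-byte floats to 1-byte integers is what turns a model that does not fit into one that does, without changing the architecture.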

Practical Applications of TinyML in Voice Recognition and Security Systems

Implementing voice recognition and security systems directly on edge devices has become increasingly practical with TinyML, enabling real-time processing without relying on cloud services. This approach boosts privacy, as voice data stays on the device, and offers offline functionality, ensuring constant availability. For example:

- Microcontrollers like ESP32 support TinyML-powered voice assistants that control lights, appliances, and sensors via voice commands.

- TinyML-equipped security cameras analyze video locally, distinguishing between harmless motion and intruders, reducing false alarms.

- Integration with smart locks and doorbells allows real-time authentication and threat detection, enhancing overall home security.

These applications improve responsiveness, safeguard personal data, and provide dependable, privacy-conscious smart home systems.
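One simple way a camera could separate a passing shadow from a genuine intruder is to require the on-device classifier to agree across several consecutive frames before raising an alarm. This k-of-n voting sketch assumes a hypothetical model that outputs a per-frame person score between 0 and 1; it illustrates the idea and is not how any particular product works.

```python
from collections import deque


class IntruderConfirmer:
    """Alarm only when at least k of the last n frames score above the threshold."""

    def __init__(self, k=3, n=5, threshold=0.8):
        if not 0 < k <= n:
            raise ValueError("need 0 < k <= n")
        self.k = k
        self.threshold = threshold
        self.recent = deque(maxlen=n)  # the oldest frame drops out automatically

    def update(self, person_score):
        """Feed one frame's person score (0..1) from the on-device model."""
        self.recent.append(person_score >= self.threshold)
        return sum(self.recent) >= self.k


confirmer = IntruderConfirmer()
print([confirmer.update(s) for s in [0.9, 0.1, 0.95, 0.2, 0.9]])
# → [False, False, False, False, True]
```

A single high-scoring frame never triggers the alarm on its own, which trades a few frames of delay for fewer false alarms; `k`, `n`, and `threshold` are the knobs for that trade-off.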

The Role of Quantization and Pruning in TinyML Model Development

Quantization and pruning are key techniques to shrink TinyML models without sacrificing too much accuracy. By reducing model size and complexity, they enable deployment on resource-constrained devices like microcontrollers. Exploring how these methods optimize performance can help you build more efficient and effective TinyML applications.

Model Size Reduction

Have you ever wondered how TinyML models fit into resource-constrained devices? Model size reduction is key. Quantization lowers the precision of weights from floating point to integers, shrinking the model without major accuracy loss. Pruning removes redundant weights or neurons, reducing size while maintaining performance. You can also develop custom models optimized for size or start from existing lightweight architectures such as MobileNet.

To deepen your understanding:

- Quantization reduces model size through precision reduction, speeding inference.

- Pruning creates sparse models by removing less important components while largely preserving accuracy.

- Iterative optimization combines methods for maximum size reduction with minimal accuracy impact.

These techniques enable deployment on smart home devices, making them more efficient and capable.
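Rough size arithmetic shows why combining techniques matters. The sketch below uses a hypothetical 100,000-parameter model and compares dense float32 and int8 storage with a pruned int8 model stored in an assumed sparse format, where each kept weight carries a 16-bit position index (for example, a column index within a row).

```python
def dense_size_bytes(num_params, bits):
    """Storage for every weight at the given bit width."""
    return num_params * bits // 8


def sparse_size_bytes(num_params, bits, sparsity, index_bits=16):
    """Storage for only the nonzero weights, each paired with a position index.

    Assumes a simple format where every kept weight carries a 16-bit index;
    real sparse formats vary.
    """
    kept = round(num_params * (1.0 - sparsity))
    return kept * (bits + index_bits) // 8


n = 100_000  # hypothetical parameter count
print(dense_size_bytes(n, 32))       # float32: 400000
print(dense_size_bytes(n, 8))        # int8: 100000
print(sparse_size_bytes(n, 8, 0.7))  # int8, 70% pruned: 90000
print(sparse_size_bytes(n, 8, 0.9))  # int8, 90% pruned: 30000
```

Under these assumptions, 70% unstructured sparsity barely beats dense int8 because the indices cost more than the weights themselves, which is one reason iterative optimization that combines methods tends to outperform any single technique.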

Maintaining Accuracy

Ever wondered how TinyML models stay accurate despite their reduced size? Carefully applied quantization and pruning are the key. Quantization compresses model parameters to save space and energy; quantization-aware training (QAT) simulates low-precision arithmetic during training so the model learns to tolerate it, which usually keeps the accuracy loss small. Post-training quantization (PTQ) is simpler to deploy because it is applied after training, though it can cost more accuracy. Pruning removes redundant parts, either entire neurons or individual connections, making models leaner while preserving essential functionality. Tuned for the target hardware and refined iteratively, these techniques balance efficiency against accuracy and enable the real-time processing that smart home devices need, keeping them fast, accurate, and energy-efficient.
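Post-training quantization can be illustrated with the common asymmetric (affine) int8 scheme, in which each float is represented as `(q - zero_point) * scale`. This is a minimal per-tensor sketch with hypothetical weights; real toolchains derive ranges from calibration data and often use per-channel scales.

```python
def quant_params(xmin, xmax, qmin=-128, qmax=127):
    """Scale and zero point for affine int8 quantization of the range [xmin, xmax]."""
    xmin, xmax = min(xmin, 0.0), max(xmax, 0.0)  # range must contain 0.0
    scale = (xmax - xmin) / (qmax - qmin) or 1.0  # avoid zero scale for all-zero tensors
    zero_point = int(round(qmin - xmin / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a float to the nearest representable int8 value, clamped to range."""
    return max(qmin, min(qmax, int(round(x / scale)) + zero_point))


def dequantize(q, scale, zero_point):
    """Recover the approximate float value the integer stands for."""
    return (q - zero_point) * scale


weights = [-0.5, -0.1, 0.0, 0.2, 0.75]  # hypothetical trained weights
scale, zp = quant_params(min(weights), max(weights))
q = [quantize(w, scale, zp) for w in weights]
restored = [dequantize(v, scale, zp) for v in q]
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

Because the zero point is chosen so that 0.0 maps to an exact integer, zero weights (including pruned ones) survive quantization unchanged, and every in-range weight lands within half a quantization step of its original value.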

Optimization Techniques

Optimization techniques like quantization and pruning are essential for developing efficient TinyML models that perform well on resource-constrained devices. Quantization reduces model size and computational load, enabling models to run on microcontrollers with limited memory. It also lowers power consumption, which is vital for energy-efficient devices. Pruning removes unnecessary neurons or connections, decreasing model complexity and increasing inference speed. These techniques can be combined for maximum efficiency. To deepen your understanding, consider these key points:

- Quantization commonly uses 8-bit integers, and more aggressive schemes drop below 8 bits to save further resources.

- Pruning can be structured, removing entire neurons, or unstructured, removing individual weights.

- Integrating quantization and pruning maximizes model compression while maintaining accuracy.
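The two pruning styles differ in what gets removed. This sketch uses weight magnitude as the importance criterion, one common choice among several: unstructured pruning zeros the smallest individual weights, while structured pruning drops whole neurons (rows of a layer's weight matrix) with the smallest L2 norm. The weight values are hypothetical.

```python
import math


def prune_unstructured(weights, sparsity):
    """Zero the smallest-magnitude fraction of individual weights."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def prune_structured(rows, keep):
    """Keep the `keep` neurons (weight rows) with the largest L2 norm."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in rows]
    ranked = sorted(range(len(rows)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    return [rows[i] for i in kept], kept


print(prune_unstructured([0.5, -0.05, 0.3, 0.01, -0.8, 0.02], 0.5))
# → [0.5, 0.0, 0.3, 0.0, -0.8, 0.0]
layer = [[0.1, 0.1], [1.0, -1.0], [0.0, 0.05], [0.5, 0.5]]
print(prune_structured(layer, keep=2))
# → ([[1.0, -1.0], [0.5, 0.5]], [1, 3])
```

In a real network, removing a neuron also means removing the matching input column of the next layer, which is why structured pruning shrinks dense computation directly while unstructured pruning needs sparse-aware storage or kernels to pay off.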

Future Trends: AI Co-Processors and Federated Learning in Smart Homes

As smart home technology advances, AI co-processors like Kneron’s KL520 and KL720 are becoming essential for enabling powerful, energy-efficient on-device AI. The KL520 powers devices like locks, doorbells, and cameras with minimal energy, supporting long battery life—up to 15 months on 8 AA batteries. The KL720 offers higher performance per watt, handling tasks like 4K image processing and natural language understanding for high-end IP cameras and smart TVs. These processors reduce latency and reliance on cloud connectivity, enabling real-time facial recognition, gesture control, and voice commands. Federated learning complements this by allowing devices to collaboratively train models without sharing raw data, preserving privacy. Together, AI co-processors and federated learning drive smarter, more secure, and private smart homes.
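The privacy benefit of federated learning comes from what is shared: model weights, not raw data. Below is a minimal sketch of the server-side aggregation step of Federated Averaging (FedAvg), one widely used federated learning algorithm; it is not a claim about what any particular product uses, and the client counts and weights are hypothetical.

```python
def federated_average(client_updates):
    """Weighted average of client model weights (the FedAvg aggregation step).

    client_updates is a list of (num_local_examples, weights) pairs. Only
    these weights reach the aggregator; raw sensor data stays on each device.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][1])
    averaged = [0.0] * dim
    for n, weights in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += (n / total) * w
    return averaged


# Hypothetical: two homes trained the same tiny model on different amounts of data.
print(federated_average([(100, [1.0, 2.0]), (300, [3.0, 4.0])]))  # → [2.5, 3.5]
```

Weighting by the number of local examples keeps a device with very little data from pulling the shared model as hard as one with a lot.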

Managing Deployment and Updates Across Multiple Devices

Managing deployment and updates across many smart home devices requires streamlined processes that ensure security, reliability, and minimal disruption for users. Efficient provisioning protocols enable secure, automated onboarding without confusing users. Secure authentication methods, such as mutual TLS with per-device certificates, ensure that each device is uniquely trusted within your ecosystem. Zero-touch provisioning lets devices configure themselves by securely fetching profiles from cloud or local servers, reducing manual work. Other practices that help include:


- Implementing firmware over-the-air (FOTA) updates with differential methods to save bandwidth and time.

- Incorporating robust rollback features to maintain device functionality if updates fail.

- Scheduling updates and staggering rollouts to prevent network congestion and ensure stability across all devices.

These strategies keep your smart home ecosystem secure, up-to-date, and smoothly functioning.
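Staggered rollouts and rollback can be sketched in a few lines. This example assumes hash-based bucketing of device IDs, so each device lands in a stable rollout wave, and a hypothetical A/B-slot model in which the previous firmware image stays available if a post-update health check fails. The device IDs and health checks are made up, and differential (delta) updates are not shown.

```python
import hashlib


def rollout_bucket(device_id, buckets=100):
    """Map a device ID to a stable bucket in [0, buckets) using a hash."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % buckets


def should_update(device_id, rollout_percent):
    """A device joins the current wave if its bucket is below the rollout percentage."""
    return rollout_bucket(device_id) < rollout_percent


class FirmwareSlots:
    """Hypothetical A/B slot model: the previous image is kept for rollback."""

    def __init__(self, version):
        self.active = version
        self.previous = None

    def install(self, version, health_check):
        """Activate `version`; roll back if the post-update health check fails."""
        old = self.active
        self.active = version
        if health_check(version):
            self.previous = old
            return True
        self.active = old  # roll back to the image that was running before
        return False


fleet = [f"device-{i}" for i in range(1000)]  # hypothetical device IDs
wave1 = [d for d in fleet if should_update(d, 10)]
print(len(wave1), "devices in the first 10% wave")
```

Because buckets are stable, raising the percentage from 10 to 50 only adds devices; everyone in the first wave stays included, so problems surface on a small group before the update reaches the whole home or fleet.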

Expanding TinyML’s Role in Smart Home Innovation and Industry Growth

TinyML is accelerating device autonomy, allowing smart home systems to operate more independently and respond instantly to user needs. As adoption grows, industries recognize its potential to streamline operations and reduce costs. This expansion not only fuels innovation but also gives companies a competitive edge in the evolving smart home market.

Accelerating Device Autonomy

Accelerating device autonomy through TinyML transforms smart home innovation by enabling devices to process data locally in real time. This allows for immediate, context-aware responses, enhancing functionality like voice recognition and motion detection. With on-device data analysis, you reduce latency and rely less on cloud processing. This approach also cuts bandwidth needs by sending only essential data, making systems more cost-effective and reliable in connectivity-limited environments.

To deepen this autonomy, consider these factors:

- Efficient on-device processing enables faster decision-making and uninterrupted operation, even with poor network signals.

- Power-efficient models extend device lifespan, reducing maintenance and enabling long-term operation on small batteries.

- Local data handling improves privacy and security by minimizing exposure of sensitive information during transmission.

Driving Industry Adoption

The rapid advancements in TinyML technology are fueling broader industry adoption, especially in the smart home sector. Major semiconductor companies like STMicroelectronics and Sony develop TinyML hardware tailored for smart devices, boosting AI capabilities without draining energy. Collaborations between AI platform providers such as Edge Impulse and chipmakers accelerate TinyML integration into wearables, consumer electronics, and sensors, expanding IoT ecosystems. Tech giants like Google and Arm partner with manufacturers to create optimized microcontroller solutions, making TinyML more accessible. Industry alliances focus on blending cloud services with edge processing to improve device independence and network efficiency, fostering scalable innovation. Additionally, startups simplify TinyML development with platforms and tools, reducing time-to-market and encouraging widespread adoption in smart home products.

Frequently Asked Questions

How Does TinyML Compare With Traditional Cloud-Based AI Solutions?

You might wonder how TinyML stacks up against traditional cloud-based AI solutions. TinyML runs lightweight models directly on small devices, offering fast, real-time responses with low power use and no need for constant internet. Cloud AI, on the other hand, handles complex tasks remotely, providing broader analytics but with higher latency and privacy concerns. TinyML’s local processing makes it ideal for smart home devices needing quick, secure, offline operation.

What Are the Typical Costs Associated With Implementing TinyML in Smart Homes?

When it comes to costs, implementing TinyML is like planting seeds for future savings. You’ll face upfront expenses for custom development, specialized skills, and hardware choices. Ongoing costs include maintenance, updates, and energy use. However, you’ll save on cloud services, reduce bandwidth, and extend device lifespan. Overall, initial investments may be high, but the long-term benefits make TinyML a smart investment for scalable, cost-efficient smart home solutions.

Can TinyML Adapt to New Data or Changing Environments Over Time?

You might wonder if TinyML can adapt to new data or changing environments over time. It’s limited in full model retraining due to hardware constraints, but you can use techniques like incremental learning, sensor fusion, and dynamic thresholds to improve adaptability. Updates are often delivered via OTA, with delta updates and model version control. While not as flexible as larger systems, TinyML still offers effective, ongoing environmental adjustments at the edge.
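The "dynamic thresholds" idea can be sketched as a baseline that slowly tracks normal readings with an exponential moving average, flagging only readings well above it. The sensor and the `alpha` and `margin` values below are hypothetical choices for illustration.

```python
class AdaptiveThreshold:
    """Flag readings far above a baseline that slowly tracks the environment."""

    def __init__(self, alpha=0.05, margin=3.0, initial=0.0):
        self.alpha = alpha    # adaptation rate: higher follows changes faster
        self.margin = margin  # how far above the baseline counts as an event
        self.baseline = initial

    def update(self, reading):
        is_event = reading > self.baseline + self.margin
        if not is_event:
            # Learn only from normal readings so events don't drag the baseline up.
            self.baseline += self.alpha * (reading - self.baseline)
        return is_event


# Hypothetical temperature sensor starting from a 20-degree baseline.
detector = AdaptiveThreshold(alpha=0.5, margin=3.0, initial=20.0)
print([detector.update(r) for r in [21.0, 22.0, 30.0]])  # → [False, False, True]
```

Updating the baseline only from non-event readings keeps a burst of anomalies from teaching the device that anomalies are normal, while gradual drift, such as a seasonal temperature change, is still absorbed.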

What Security Risks Are Unique to TinyML-Enabled Smart Home Devices?

Like the Trojan Horse, TinyML-enabled smart home devices can hide vulnerabilities inside their small size. Their limited resources leave room for only lightweight security protocols, which makes them prone to tampering, side-channel attacks, and theft of the on-device models. Their network connections open doors for remote attacks, while physical access allows malicious hardware modifications or data manipulation. Because resource constraints limit how much built-in protection these devices can carry, staying vigilant about these evolving, subtle threats is essential.

How Accessible Is TinyML Development for Hobbyists and Small Manufacturers?

You’ll find TinyML development quite accessible if you’re a hobbyist or small manufacturer. Affordable microcontroller boards such as Arduino and specialized kits simplify hardware setup. Free platforms such as Google Colab and open-source frameworks such as TensorFlow Lite for Microcontrollers make software development manageable on modest computers. Growing industry support, along with plenty of tutorials, community projects, and collaborations, makes it easier to experiment, learn, and build TinyML-powered devices without hefty costs or advanced expertise.

Conclusion

As you delve into the world of TinyML, imagine it as the silent engine powering your smart home’s heartbeat—delivering intelligence without sacrificing privacy or energy. By embracing TinyML’s innovations, you’re not just upgrading devices; you’re shaping a future where your home’s technology is smarter, faster, and more secure. It’s like planting seeds today that will blossom into a smarter, more connected tomorrow—where every device works in harmony, quietly and efficiently.